Some of those who leave their job are said to be experiencing symptoms similar to post-traumatic stress disorder.

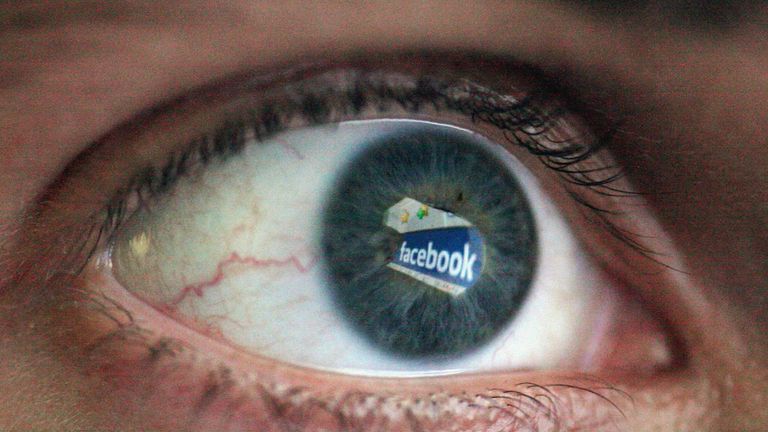

Content moderators at Facebook are being exposed to so much traumatic material that some are smoking cannabis at work and others have "trauma bonded" by having sex on the job, a report claims.

Although 15,000 people around the world are employed to check content uploaded to Facebook, the conditions they work in are being kept hidden by third-party contracts and non-disclosure agreements, according to an investigation by technology news site The Verge.

In a dozen interviews with current and former employees of the third-party company Cognizant, The Verge said that Facebook's content moderators were experiencing traumatic conditions, suffering panic attacks after watching videos of murders, terrorism, and child abuse.

In January, Facebook posted record profits of $6.9bn (£5.3bn) after a year of scandals plagued the social media giant.

Content moderators in the US make just over 10% ($28,000 or £21,000) of what the average Facebook employee earns ($240,000 or £180,000) per year, and the report claims they do not have access to adequate mental health support, with none at all available to staff once they leave the job.

Employees can reportedly be fired for making "just a handful of errors a week" while those who remain are afraid of former colleagues seeking vengeance - with one current worker said to have brought a gun to work to protect himself.

Facebook has said there are "misunderstandings and accusations" about its content review practices and that it is committed to working with partner companies to make sure employees get a high level of support.

According to The Verge investigation, some of those who leave Cognizant are experiencing symptoms similar to post-traumatic stress disorder.

Those who stay at the company tell dark jokes about killing themselves to cope with the material they have to view and smoke cannabis during their breaks to numb their emotions.

Others have reportedly been found having sex inside stairwells and in a room for breastfeeding mothers in what one employee described as "trauma bonding".

Some of them told The Verge they had begun to sympathise with the fringe viewpoints of videos they had watched, including that the Earth is flat, or that 9/11 was the product of a conspiracy rather than a terror attack.

It comes as the UK government prepares to make social networks liable for the content on their platforms, which is likely to mean such content reviewing will become more common.

MPs recently called for a code of ethics to ensure social media platforms remove harmful content from their sites and branded Facebook "digital gangsters" in a parliamentary report.

The committee wrote: "Social media companies cannot hide behind the claim of being merely a 'platform' and maintain that they have no responsibility themselves in regulating the content of their sites."

Responding to the story on Twitter in a personal capacity, Facebook's former head of security Alex Stamos agreed that the human cost of content reviewing was a direct result of demands that the company take responsibility for moderating content on its platform.

Mark Zuckerberg has regularly responded to criticism of content on Facebook by stating he will hire extra staff as content moderators.

The parliamentary committee which recommended the company take more responsibility for content on its platform did not reply to Sky News' request for a statement on The Verge's report.

Facebook responded to Sky News with a link to a blog post, which stated: "We know there are a lot of questions, misunderstandings and accusations around Facebook's content review practices - including how we as a company care for and compensate the people behind this important work.

"We are committed to working with our partners to demand a high level of support for their employees; that's our responsibility and we take it seriously."

The blog added: "Given the size at which we operate and how quickly we've grown over the past couple of years, we will inevitably encounter issues we need to address on an ongoing basis."

Facebook said mechanisms were in place at the companies it works with to ensure any concerns reported are "never the norm".

These include contracts that stipulate "good facilities, wellness breaks for employees, and resiliency support", as well as site visits where Facebook staff hold "1:1 conversations and focus groups with content reviewers".
